Google's betting invisible AI watermarks will be just as good as visible ones. The company is continuing its week of Gemini 3 news with an announcement that it's bringing its AI content detector, SynthID Detector, out of private beta for everyone to use.

This news comes in tandem with the release of Nano Banana Pro, Google's ultrapopular AI image editor. The new Pro model comes with a lot of upgrades, including the ability to create legible text and upscale your images to 4K. That's great for creators who use AI, but it also means it will be harder than ever to identify AI-generated content.

We've had deepfakes since long before generative AI. But AI tools, like the ones Google and OpenAI develop, let anyone create convincing fake content more quickly and cheaply than ever before. That's led to a massive influx of AI content online, everything from low-quality AI slop to realistic-looking deepfakes. OpenAI's viral AI video app, Sora, was another major tool that showed us how easily these AI tools can be abused. It's not a new problem, but AI has led to a dramatic escalation of the deepfake crisis.

That's why SynthID was created. Google introduced SynthID in 2023, and every AI model it has released since then has attached these invisible watermarks to AI content. Google adds a small, visible, sparkle-shaped watermark, too, but neither really helps when you're quickly scrolling your social media feed and not vigorously analyzing each post. To help prevent the deepfake crisis (that the company helped create) from getting worse, Google is introducing a new tool for identifying AI content.

You can now ask Gemini if an image was created with AI. But it will only recognize images made with Google's AI.

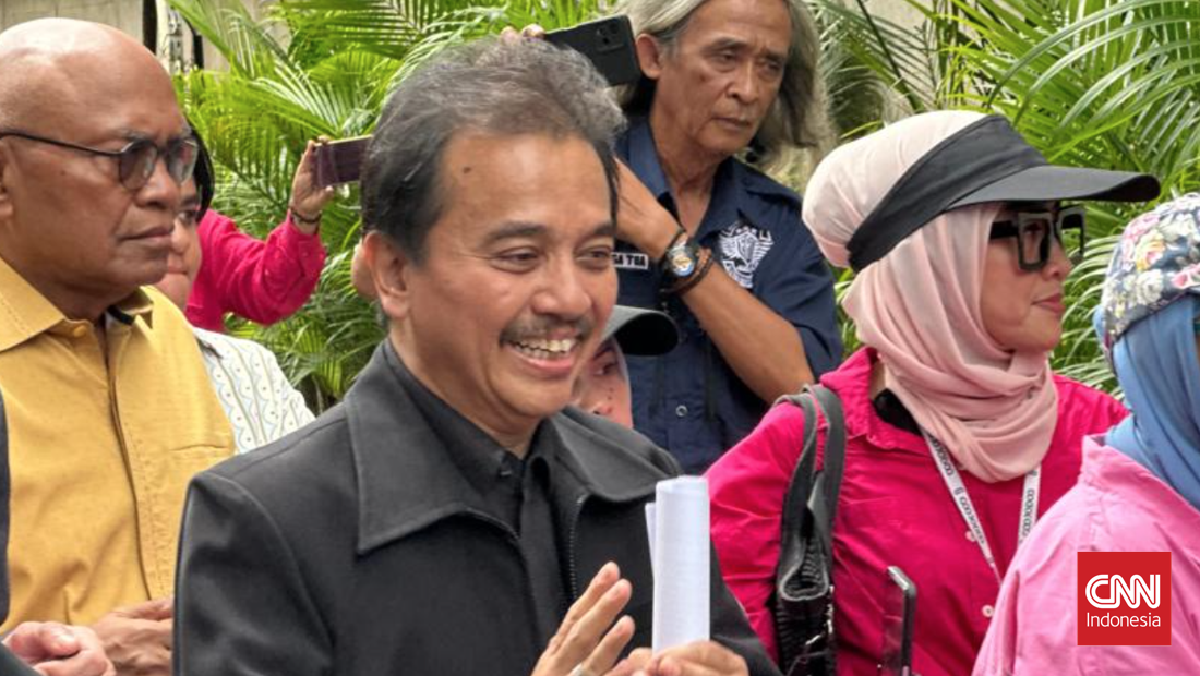

Google/Screenshot by CNET

SynthID Detector does exactly what its name implies: It analyzes images and can pick up on the invisible SynthID watermark. So in theory, you can upload an image to Gemini and ask the chatbot whether it was created with AI. But there's a huge catch -- Gemini can only confirm if an image was made with Google's AI, not any other company's. Because there are so many AI image and video models available, Gemini likely won't be able to tell you whether an image was generated with a non-Google program.

Right now, you can only ask about images, but Google said in a blog post that it plans to expand the capabilities to video and audio. No matter how limited, tools like these are still a step in the right direction. There are a number of AI detection tools, but none of them are perfect. Generative media models are improving quickly, sometimes too quickly for detection tools to keep up. That's why it's incredibly important to label any AI content you're sharing online and to remain skeptical of any suspicious images or videos you see in your feeds.

For more, check out everything in Gemini 3 and what's new in Nano Banana Pro.
